As generative AI tools like ChatGPT, Gemini, Claude, and Perplexity redefine how users discover, evaluate, and trust information, one thing is clear: the old KPIs aren’t built for this new terrain.

For global brand marketers, LLMs are no longer edge cases; they’re front-line intermediaries. These AI systems are increasingly the first - and sometimes only - point of content discovery, product consideration and competitor comparison.

But here’s the real shift: there are no clicks. No sessions. No visible referrals. Yet your brand may still be influencing perception, shaping decisions, and establishing authority without ever appearing in your standard analytics.

This article introduces a practical measurement framework for brand performance in a ‘zero-click’ future, providing strategic marketers with the tools to evaluate visibility across LLM ecosystems with the same discipline as they do traditional SEO, media, and CRM.

If we accept that LLMs mediate attention, then we must measure them as such.

TL;DR: Executive Takeaways

- LLM environments create measurable brand impact - without sending trackable traffic

- Traditional SEO and CRM dashboards miss this influence layer

- Marketers need a purpose-built visibility framework that spans inclusion, accuracy, frequency, and source quality

- LLM visibility can and should be benchmarked across AI platforms through prompt auditing, schema readiness, and narrative representation scoring

- Brand measurement in 2025 must account for AI-generated exposure, not just first- and last-click analytics


The Visibility Gap: Why Traditional Metrics Miss the New Reality

CMOs and marketing leaders face a growing performance paradox:

Your brand may be recommended, compared or cited by AI platforms - yet your dashboards show no corresponding uplift in traffic or attributable conversions.

Why? Because AI-driven discovery breaks the behaviours most marketing systems are built to track:

- Users read and act on summaries without clicking through to your site

- Source citations, when shown, are often stripped of referral tracking

- Engagement and influence happen “off-platform” - outside your owned site, ads, or CRM systems

The result: Blind spots. Untracked influence. And misplaced attribution.

This creates two urgent challenges:

- Invisible influence:

Brand consideration or preference may be growing, unseen – reinforced by LLMs but absent from performance dashboards.

- Misplaced attribution:

Traffic from branded search, performance media, or even direct visits may stem from AI-assisted exposure - yet no reporting system connects the dots.

Why this matters: Brands must distinguish between gaining traffic and gaining narrative control. In LLM environments, you win by being included in the answer, not clicked in the link.

Measurement models must evolve to track influence, not just interaction.

A New Measurement Stack for LLM Visibility

To measure LLM performance with the same strategic discipline as SEO and media, marketers need a new kind of framework — one built for influence over interaction.

We propose a four-layer model that answers two fundamental questions:

- Are we showing up in the moments that matter?

- And when we do, are we presented accurately and competitively?

Because LLM platforms don’t provide analytics APIs (yet), visibility must be tracked from the outside-in - using structured prompt testing, citation audits, and diagnostic analysis that mirrors how AI systems parse and summarize information.

This is not precision analytics - it’s directional surveillance. But that surveillance can be consistently measured, segmented, and actioned to improve brand performance inside AI-led discovery journeys.

1. Inclusion Metrics: “Are we showing up?”

Inclusion metrics measure your brand’s real-time visibility in response to prompts that users might realistically ask LLMs - questions about your brand, competitors, product recommendations, or category definitions.

These aren’t just reference checks. They are the clearest indicators of whether LLMs publicly acknowledge, compare, or recommend your brand in active conversations.

They are also the most urgent layer of measurement, because they reflect your current presence in zero-click discovery journeys, which are increasingly shaping buyer intent without ever reaching your site.

Important distinction:

- Inclusion Metrics track whether you actually show up in responses – regardless of available content.

- Coverage Metrics (layer 3) measure whether your brand has content that answers the questions users are asking.

Inclusion is about being mentioned, coverage is about earning the right to be mentioned.

Metric: Inclusion Rate

| What is it? |

% of tested AI prompt responses where your brand is mentioned by name |

| Why it matters |

This is your brand’s visibility baseline in large language models. Inclusion rate reveals how often – and in what context – your brand is surfaced in relevant prompts. It also gives you a reference point to benchmark against competitors, track over time, and analyse by category, product, or region. |

| How to measure it |

Use tools like Profound or ScrunchAI to run regular prompt tests at scale. You can manually test prompts in early phases (just ensure you’re logged out to avoid personalisation), but automation helps scale across prompt types, platforms and personas.

Think of prompts less like traditional SEO keywords and more like qualitative diagnostics. Because real user prompts (and responses) are so bespoke, there is limited value in tracking hundreds or thousands of prompts as you might track traditional SEO keywords with attached search volume data.

Aim for 10-20 representative AI prompts for each main topic category you want to measure – for example brand questions, ‘vs competitor’ questions, key category questions.

If you have very specific target personas, consider including the persona in your prompt (or as a pre-prompt instruction, if your chosen platform supports it).

Example: "You are a Chief Technology Officer for a global professional services firm. You are responsible for modernizing the enterprise's technology stack, enabling secure AI adoption, reducing technical debt, and aligning global IT operations with service delivery excellence." |

| When to measure it |

Frequently, ideally daily or every few days. This allows you to build a chart of visibility over time (vs competitors), which is in some ways more useful than the raw metric itself. |

| How to segment it (for deeper analysis) |

Use tagging or category segmentation in your chosen tracking platform to group prompts into topical themes. For example:

- Brand

- “Is [Brand] reliable/trustworthy/legit”

- “Pros and cons of [Brand/product]”

- “[Brand] features and benefits”

- “[Brand] pricing”

- “How to use [Brand] for [function]”

- Category leader prompts

- “What are the top [product/service category] brands?”

- “Best [category] companies in 2025”

- “[Category] market leaders”

- Brand comparison / alternatives

- “How does [Brand] compare to [Competitor]”

- “What are some alternatives to [Brand/competitor]?”

- “How does [Brand] compare to [Competitor] for [use case]?”

- Recommendation prompts (split by service/product area)

- “What’s the best [product] for [use case]?”

- “Best tools for large teams to manage [function]?”

This will allow you to identify under-performing areas that need additional content or Digital PR support, and competitors’ strengths and weaknesses. |

| Where to measure it |

Track across multiple LLM platforms to measure respective visibility and identify under-performing platforms.

Example: ChatGPT, Google AI Overviews, Google AI Mode, Claude, Gemini, Perplexity, Bing Copilot |
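As a rough illustration of the arithmetic behind Inclusion Rate, the sketch below counts how many logged responses mention a brand by name, per platform. The `responses` structure, the brand name "Acme CRM" and the function name are hypothetical; this is not the export format of Profound, ScrunchAI or any other tool.

```python
import re
from collections import defaultdict

def inclusion_rate(responses, brand):
    """Return {platform: % of logged responses that mention `brand` by name}."""
    # Case-insensitive whole-phrase match; assumes the brand name
    # starts and ends with a letter or digit.
    pattern = re.compile(rf"\b{re.escape(brand)}\b", re.IGNORECASE)
    totals = defaultdict(int)
    hits = defaultdict(int)
    for r in responses:
        totals[r["platform"]] += 1
        if pattern.search(r["text"]):
            hits[r["platform"]] += 1
    return {p: round(100 * hits[p] / totals[p], 1) for p in totals}

# Hypothetical log of prompt tests (one row per prompt per platform)
responses = [
    {"platform": "ChatGPT", "prompt": "Best CRM tools?",
     "text": "Popular options include Acme CRM and Globex."},
    {"platform": "ChatGPT", "prompt": "Alternatives to Globex",
     "text": "Consider Initech or Umbrella."},
    {"platform": "Perplexity", "prompt": "Best CRM tools?",
     "text": "Acme CRM is frequently recommended."},
]

rates = inclusion_rate(responses, "Acme CRM")
print(rates)
```

Running the same calculation on each test date, and for each competitor name, gives the visibility-over-time chart described above.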

Metric: Citation Rate

| What is it? |

% of tested LLM responses that cite your brand’s owned content, product pages, or other assets as a source. Appears as a footnote, source link, or attribution — depending on platform. |

| Why it matters |

Citation is a trust signal. It shows that large language models don’t just know your brand – they rely on your content to answer user queries. This is a higher bar than “mentioning” you: it means your site, PR, media, or editorial footprint is authoritative enough to shape model outputs. |

| How to measure it |

Use the same prompt sets as your Inclusion Rate tracking. Within each response:

- Log the number of times your brand is cited as a source (directly or via domains you control).

- Categorize by source type:

- Owned (brand site, blog, product page)

- Earned (media coverage, interviews, analyst briefings)

- External (Wikipedia, Reddit, product directories)

Tools like Profound and ScrunchAI can help aggregate this. Manual reviews can work for a small prompt set. |

| When to measure it |

Frequently, ideally daily or every few days. |

| How to segment it (for deeper analysis) |

You can segment by citation source type, asset class, or topic focus. For example:

- Are citations mostly from product pages or PR placements?

- Are they linked to leadership, features, or awards?

- Are competitors cited more often, and from higher-authority source domains?

This helps you connect digital PR, SEO, and content investments to LLM performance. |

| Where to measure it |

- Perplexity (most citation-transparent today)

- Gemini (shows more sources on click-through)

- Google AI Overviews / AI Mode

- Bing AI answers

|
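A minimal sketch of the Citation Rate calculation, assuming each logged response carries a list of cited URLs. The domain lists and sample data are hypothetical, and the owned/earned/external split simply follows the source types listed above.

```python
from collections import Counter
from urllib.parse import urlparse

# Hypothetical domain lists - replace with the domains you control or track.
# Exact-host matching only: subdomains such as blog.acme.example need adding.
OWNED_DOMAINS = {"acme.example"}
EARNED_DOMAINS = {"tradenews.example"}

def source_type(url):
    """Classify a cited URL as owned, earned or external."""
    host = urlparse(url).netloc.lower()
    host = host[4:] if host.startswith("www.") else host
    if host in OWNED_DOMAINS:
        return "owned"
    if host in EARNED_DOMAINS:
        return "earned"
    return "external"

def citation_rate(responses):
    """% of responses that cite at least one owned URL."""
    cited = sum(
        any(source_type(u) == "owned" for u in r["citations"])
        for r in responses
    )
    return round(100 * cited / len(responses), 1)

responses = [
    {"prompt": "Best CRM tools?",
     "citations": ["https://www.acme.example/pricing",
                   "https://en.wikipedia.org/wiki/CRM"]},
    {"prompt": "Acme vs Globex",
     "citations": ["https://tradenews.example/acme-review"]},
    {"prompt": "Top CRM vendors", "citations": []},
    {"prompt": "Is Acme reliable?",
     "citations": ["https://www.reddit.com/r/crm/"]},
]

rate = citation_rate(responses)
by_type = Counter(source_type(u) for r in responses for u in r["citations"])
print(rate, dict(by_type))
```

The `by_type` breakdown supports the segmentation questions above, such as whether citations come mostly from owned pages or from PR placements.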

2. Representation Metrics: “Are we described accurately and competitively?”

Surfacing in AI-generated responses is only half the battle. The other half, arguably more critical, is how you're portrayed when mentioned. Representation metrics track qualitative aspects of brand visibility: are you accurately described, framed in the right context, and positioned competitively?

Metric: Answer Accuracy Score

| What is it? |

% of brand mentions that are factually correct, current and align with legitimate use-cases |

| Why it matters |

AI platforms increasingly shape perception at the moment of discovery. If your brand is mentioned but misrepresented, the risk isn’t just missed opportunity, it’s narrative drift. In regulated industries, it can pose compliance threats. |

| How to measure it |

Manually review a sample of prompts that include your brand, product areas and potentially key leadership executives. For each mention:

- Score whether factual details are correct (e.g. pricing, locations, use cases, integrations, certifications)

- Identify hallucinations or outdated references (e.g. retired products, departed leadership)

|

| When to measure it |

Monthly, or ad-hoc after major brand/content updates. |

| How to segment it (for deeper analysis) |

- Product line or service area

- Region or language

- Specific brand elements (e.g., executive bios, differentiators, awards)

- Type of error (outdated info, hallucination, misattribution)

|

| Where to measure it |

Accuracy testing applies across all major LLMs, but is especially critical on:

- Perplexity: Citations reveal source quality/integrity

- ChatGPT / Claude / Gemini: Confirm how freely generated answers reflect reality

- Google AI Overviews: Where incorrect summary content may affect search visibility

|
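Once the manual review is logged, the score itself is simple arithmetic. The sketch below assumes a hypothetical review log in which each reviewed mention is marked correct or incorrect and tagged with one of the error types listed above.

```python
from collections import Counter

# Hypothetical manual review log: one row per reviewed brand mention
reviews = [
    {"prompt": "Acme pricing", "platform": "ChatGPT",
     "correct": True, "error_type": None},
    {"prompt": "Acme integrations", "platform": "Gemini",
     "correct": False, "error_type": "outdated info"},
    {"prompt": "Who leads Acme?", "platform": "Claude",
     "correct": False, "error_type": "hallucination"},
    {"prompt": "Acme certifications", "platform": "Perplexity",
     "correct": True, "error_type": None},
]

def accuracy_score(rows):
    """% of reviewed mentions marked factually correct."""
    correct = sum(r["correct"] for r in rows)
    return round(100 * correct / len(rows), 1)

score = accuracy_score(reviews)
errors = Counter(r["error_type"] for r in reviews if not r["correct"])
print(score, dict(errors))
```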

Metric: Narrative Consistency Index

| What is it? |

A qualitative score reflecting how consistently your brand is described across different LLM platforms, in terms of tone, value proposition, product framing, and competitive context |

| Why it matters |

Highlights whether AI describes you consistently - or portrays fragmented positioning depending on the model. Narrative Consistency Index helps ensure your brand voice, differentiation, and selling points are not distorted when filtered through AI. |

| How to measure it |

Test a standard set of brand-focused prompts across multiple LLMs:

- “Tell me about [Brand X]”

- “How does [Brand X] compare to [Competitor Y]?”

- “Who are the most trusted [category] providers?”

Review responses for:

- Tone: Is it aligned with your brand (e.g., premium, approachable, enterprise-ready)?

- Positioning: Are core narrative pillars reflected correctly?

- Feature framing: Are strengths and differentiators emphasized appropriately?

- Sentiment: Are recommendations enthusiastic, neutral, or cautionary? (Some AI measurement platforms have sentiment analysis functionality built-in)

Score each output from 1–5 for alignment. Document major phrasing gaps. |

| When to measure it |

Quarterly, or ad-hoc following brand messaging updates. |

| How to segment it (for deeper analysis) |

- Platform comparison (ChatGPT vs. Gemini vs. Claude)

- Prompt intent (introductory vs. comparative vs. use-case)

- Market positioning (e.g., SMB-focused vs. enterprise-focused responses)

|

| Where to measure it |

All major LLMs |
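The article does not prescribe a formula for rolling the 1-5 scores into a single index, so the sketch below shows one possible approach: average the reviewer scores per platform, then report the overall mean and the spread between the best and worst platform. The four scoring dimensions mirror the review criteria above; the scores themselves are invented.

```python
from statistics import mean

# Hypothetical reviewer scores (1-5) per platform and review criterion
scores = {
    "ChatGPT": {"tone": 4, "positioning": 5, "feature framing": 4, "sentiment": 4},
    "Gemini":  {"tone": 3, "positioning": 3, "feature framing": 4, "sentiment": 3},
    "Claude":  {"tone": 4, "positioning": 4, "feature framing": 5, "sentiment": 4},
}

# Average alignment score per platform
platform_avg = {p: round(mean(d.values()), 2) for p, d in scores.items()}

# Overall index, plus the gap between the best- and worst-aligned platform
index = round(mean(platform_avg.values()), 2)
spread = round(max(platform_avg.values()) - min(platform_avg.values()), 2)

print(platform_avg, index, spread)
```

A wide spread is a hint that positioning is fragmented across models, even if the overall index looks healthy.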

3. Coverage Metrics: “Are we answering the right questions?”

Being included in an AI response starts with eligibility. Coverage metrics measure whether your brand has content that addresses the kinds of questions people are asking LLMs - product comparisons, category overviews, or recommendation queries.

This layer doesn’t measure current visibility. It measures potential visibility. It answers: if the AI models wanted to recommend you, would they have good material to work from?

Coverage metrics are especially useful for identifying content gaps that prevent your brand from appearing in AI results, regardless of authority or structure.

Metric: Prompt Coverage Map

| What is it? |

An evaluation of how well your brand content addresses the prompts that LLMs are likely to receive from your target audience, across the funnel. |

| Why it matters |

AI models can’t recommend what they can’t understand, and they can’t understand what isn’t well expressed. Prompt coverage scoring evaluates your content’s readiness to be surfaced by LLMs based on topic depth, clarity, and structure.

This metric sits upstream of visibility. It uncovers where your narrative is missing, insufficient, or outdated, before prompt-testing even begins. |

| How to measure it |

Create a defined prompt set aligned to key use cases, buyer tasks and funnel stages (TOFU → BOFU).

- Review whether you have content (ideally both owned and earned) that meaningfully answers each prompt

- Score your owned assets for topic depth, freshness, clarity, and specificity

- Flag gaps where content is missing altogether, present but thin, or structurally weak (i.e. lacking markup or context)

A simple 1–3 scale (No Coverage → Weak → Strong) can be used to develop a repeatable scorecard. |

| When to measure it |

- Quarterly or ad-hoc when auditing content footprint or planning content sprints.

- After significant website updates, rebrands or restructure

- After reviewing and updating Inclusion Metric prompt lists, to connect visibility gaps with content gaps.

|

| How to segment it (for deeper analysis) |

- Topic cluster or capability

- Content type (thought leadership, product pages, FAQ, reviews, etc.)

- Funnel stage (awareness, consideration, decision)

|

| Where to measure it |

This is an internal audit conducted on your owned web, content, and PR assets. Combine it with analysis from:

- SEO Content Strategies / Gap Analysis

- Site structure or crawl tools (e.g., Sitebulb, Screaming Frog)

- Content inventories and UX audits

|
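The 1-3 scorecard can be kept in a spreadsheet, but a short script makes it repeatable. This is a sketch under assumed data: the prompts, funnel stages and scores are placeholders.

```python
from collections import defaultdict

LABELS = {1: "No Coverage", 2: "Weak", 3: "Strong"}

# Hypothetical audit results: one row per prompt in the defined prompt set
coverage = [
    {"prompt": "What is [category]?", "stage": "awareness", "score": 3},
    {"prompt": "Best [category] tools for large teams", "stage": "consideration", "score": 2},
    {"prompt": "[Brand] vs [Competitor]", "stage": "consideration", "score": 1},
    {"prompt": "[Brand] pricing", "stage": "decision", "score": 3},
]

def stage_averages(rows):
    """Average coverage score per funnel stage."""
    by_stage = defaultdict(list)
    for row in rows:
        by_stage[row["stage"]].append(row["score"])
    return {stage: sum(v) / len(v) for stage, v in by_stage.items()}

# Prompts scored below "Strong" are candidates for new or improved content
gaps = [(r["prompt"], LABELS[r["score"]]) for r in coverage if r["score"] < 3]
averages = stage_averages(coverage)

print(averages)
print(gaps)
```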

Metric: Share of Synthesized Voice (a.k.a Share of AI Voice)

| What is it? |

The percentage of brand mentions your company receives - relative to competitors - in prompts that surface multiple entities (e.g., comparison queries, top tools, best providers, etc.). Typically based on inclusion, sometimes weighted by placement or citation tone. |

| Why it matters |

When users ask open-ended prompts like "What are the best [category] tools?" or "Who are the top competitors to [Brand X]?," LLMs generate synthesized answers, often naming several players. This metric shows how much mindshare your brand claims in those multi-brand outputs - and, just as importantly, who else is occupying that space. |

| How to measure it |

Track a defined set of category and comparison prompts (e.g. "Best [category] software tools", "Compare [Brand A] and [Brand B]")

- Log each time your brand - and competitors - are mentioned

- Calculate your share of mentions per prompt or group (e.g. 3 out of 5 prompts where multiple brands appeared = 60%)

- Optionally weight results by order of mention or tone of recommendation (bear in mind order of mention is likely to be personalised to users and inconsistent)

|

| When to measure it |

Monthly or Quarterly for benchmarking |

| How to segment it (for deeper analysis) |

- Track head-to-head battles with key competitors

- Monitor shifts in share over time to evaluate campaign impact or strategic positioning wins/losses

|

| Where to measure it |

Use across all LLM platforms that generate list-style or comparative responses:

- Perplexity

- ChatGPT (especially with browsing enabled)

- Gemini

- Claude

- Google AI Overviews

Use consistent prompt phrasing to better track performance over time. |
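A minimal sketch of the relative-share calculation, assuming one mention is counted per brand per response and no weighting is applied. Note this differs from the per-prompt example above (3 out of 5 prompts = 60%), which measures inclusion in multi-brand prompts; here each brand's mentions are divided by all brand mentions. Brand names and responses are invented.

```python
import re
from collections import Counter

BRANDS = ["Acme", "Globex", "Initech"]  # hypothetical brand and competitors

def share_of_voice(responses, brands):
    """Each brand's % share of all brand mentions across the responses."""
    mentions = Counter()
    for text in responses:
        for brand in brands:
            # Count at most one mention per brand per response
            if re.search(rf"\b{re.escape(brand)}\b", text, re.IGNORECASE):
                mentions[brand] += 1
    total = sum(mentions.values())
    return {
        b: round(100 * mentions[b] / total, 1) if total else 0.0
        for b in brands
    }

responses = [
    "Top picks: Acme, Globex and Initech.",
    "Globex and Initech lead the category.",
    "Acme is a strong alternative to Globex.",
]

share = share_of_voice(responses, BRANDS)
print(share)
```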

4. Source Metrics: “Are we driving citation-worthy content?”

Inclusion and accuracy depend heavily on what content the LLM can find, trust, and understand. Source metrics assess the underlying ecosystem of signals that shape whether – and how – your brand is referenced.

These metrics reflect three types of influence:

- Trustworthiness: Are the sites citing or hosting your brand considered credible by AI models?

- Machine-readability: Is your content structured in ways LLMs understand (schema, markup, metadata)?

- Training presence: Is your brand represented inside the datasets that models were trained or aligned on?

Metric: Citation Authority

| What is it? |

A qualitative rating of the domains and sources that LLMs cite when referencing your brand; ranked by credibility, trustworthiness, and relevance. |

| Why it matters |

The quality of the sources citing you directly influences how LLMs frame your brand. High-authority citations (e.g., Wikipedia, respected main-stream media, expert industry blogs) reinforce credibility. Low-authority or unverified sources introduce risk, or even misrepresentation. |

| How to measure it |

Use your prompt tracking data to log every time your brand is cited. Then:

- Categorize citation domains by trust tier:

- High-trust (e.g. Wikipedia, mainstream news, respected trade media)

- Medium-trust (e.g. vendor directories, niche blogs, product review sites)

- Low-trust (e.g. aggregator spam, unofficial forums, Reddit-style UGC without moderation)

- Assign weights or scoring (e.g., 3/2/1 or percentages by tier)

- Track over time and correlate with changes in inclusion or message quality

This can be done manually or via platforms that provide full prompt/citation exports, like Profound or ScrunchAI. |

| When to measure it |

Monthly |

| How to segment it (for deeper analysis) |

- By content type (product vs. brand vs. executive leadership)

- By topic (e.g., “digital transformation” vs. “pricing” vs. “industry trust”)

- By geography or business unit

|

| Where to measure it |

Track across AI platforms that include source citations: Perplexity, Bing, Google AI Overviews, Gemini |
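The 3/2/1 weighting can be turned into a single average score per reporting period. The sketch below assumes you maintain your own domain-to-tier mapping; the domains and tier assignments shown are hypothetical, and unclassified domains default to the lowest tier.

```python
TIER_WEIGHTS = {"high": 3, "medium": 2, "low": 1}

# Hypothetical tier assignments - maintain your own list
DOMAIN_TIERS = {
    "en.wikipedia.org": "high",
    "tradenews.example": "high",
    "reviews.example": "medium",
    "spamlist.example": "low",
}

def authority_score(cited_domains, default_tier="low"):
    """Average tier weight (1-3) across all logged citations."""
    weights = [
        TIER_WEIGHTS[DOMAIN_TIERS.get(d, default_tier)] for d in cited_domains
    ]
    return round(sum(weights) / len(weights), 2) if weights else 0.0

# One entry per citation logged from prompt tracking
cited = [
    "en.wikipedia.org",
    "reviews.example",
    "reviews.example",
    "spamlist.example",
    "unknown.example",  # not in the mapping, so scored as low-trust
]

score = authority_score(cited)
print(score)
```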

Metric: Schema Signal Strength

| What is it? |

Presence and completeness of structured data feeding AI visibility and answerability. |

| Why it matters |

Schema markup improves machine readability. For traditional search engines, it helps generate rich results and featured snippets. For LLMs, it increases the likelihood that your content will be parsed, trusted, and retrieved as a coherent answer source.

Well-structured pages – especially those covering FAQs, product attributes, or how-tos – are more easily understood by AI models browsing or retrieving real-time web content. |

| How to measure it |

Audit schema markup across high-priority content types:

- Product and Service pages

- FAQ and How-To articles

- Author and Organization metadata

- High-traffic landing pages

- Core thought leadership, tools, or reviews

Use tools like Screaming Frog (with schema extraction enabled), Sitebulb, Google’s Rich Results Test or the Schema Markup Validator.

Create a scoring model based on completeness, breadth of types used, and consistency.

Example scoring:

- 0 = Missing schema

- 1 = Basic schema only (incomplete/untagged)

- 2 = Fully marked-up page with relevant types and attributes

|

| When to measure it |

Quarterly, or ad-hoc after content/website updates. |

| How to segment it (for deeper analysis) |

- By site section (e.g., product pages vs. blog vs. help center)

- By business unit or language/locale

- By page performance (tie schema health to Inclusion or Accuracy metrics)

|

| Where to measure it |

This is a technical audit on your owned site. While AI platforms don’t list schema directly, platforms influenced by structured data include:

- Google AI Overviews

- Bing AI

- Gemini (browser-based enrichment contexts)

- ChatGPT Browsing or plugins (when enabled)

|
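For teams without a crawler to hand, the 0/1/2 scoring can be approximated with a short script. The sketch below extracts JSON-LD blocks from a page's HTML and scores them; the `EXPECTED` attribute sets are illustrative assumptions, not schema.org or Google requirements, and Microdata or RDFa markup is ignored.

```python
import json
from html.parser import HTMLParser

class JsonLdExtractor(HTMLParser):
    """Collects parsed JSON-LD blocks from <script type="application/ld+json">."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buf = []
        self.items = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buf = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buf.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self._in_jsonld = False
            try:
                self.items.append(json.loads("".join(self._buf)))
            except json.JSONDecodeError:
                pass  # malformed markup is treated as missing

# Assumed attribute sets per type - adjust to your own scoring model
EXPECTED = {
    "Product": {"name", "description", "offers"},
    "FAQPage": {"mainEntity"},
}

def schema_score(html):
    """0 = missing schema, 1 = basic schema only, 2 = expected attributes present."""
    parser = JsonLdExtractor()
    parser.feed(html)
    blocks = [b for b in parser.items if isinstance(b, dict)]
    if not blocks:
        return 0
    for block in blocks:
        schema_type = block.get("@type")
        expected = EXPECTED.get(schema_type) if isinstance(schema_type, str) else None
        if expected and expected.issubset(block.keys()):
            return 2
    return 1

no_schema = "<html><body><h1>Acme</h1></body></html>"
partial = '<script type="application/ld+json">{"@type": "Product", "name": "Acme CRM"}</script>'
full = (
    '<script type="application/ld+json">'
    '{"@type": "Product", "name": "Acme CRM", "description": "CRM", "offers": {"price": "10"}}'
    "</script>"
)

print(schema_score(no_schema), schema_score(partial), schema_score(full))
```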

Metric: Training Set Presence (Proxy Metric)

| What is it? |

A qualitative signal of whether your brand appears in well-known public datasets commonly used to train or align LLMs and search AI systems. |

| Why it matters |

Even before LLMs crawl the open web, their baseline understanding of your brand is shaped by the data they’re trained on. Appear in trusted, high-authority datasets, and you’re more likely to be recognized and described correctly – even in prompts you didn’t anticipate.

While you can’t audit model training pipelines directly, you can track your presence in the public domains that influence most commercial and open-source LLMs. |

| How to measure it |

Manually search for your brand across known AI training domains. Prioritize:

- Wikipedia (often a key reference in GPT-4, Gemini, Mistral)

- Reddit (used for tone, comparison thinking, and conversational context)

- Stack Overflow / Quora (for developer- or product-related expertise)

- Trusted third-party directories (e.g. Crunchbase, DBpedia, Open Library)

- High-authority news media, analyst writeups, or public educational resources

Use site-specific searches (e.g., “site:en.wikipedia.org [Your Brand]”) or monitoring tools like Brandwatch, Sprout Social, Meltwater, or SEMrush. |

| When to measure it |

Infrequently, either every six months or when preparing annual PR and/or SEO strategies. |

| How to segment it (for deeper analysis) |

- By content type (e.g. product info vs. executive bios)

- By dataset type (encyclopedic, user-generated, technical)

- By time (e.g. how current is your last mention or update?)

|

| Where to measure it |

GPT/prompt audits (citations and mentions)

Manual site search within key platforms (Wikipedia, Reddit, etc) |
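To keep the manual check consistent between audits, the site-specific searches can be generated from a fixed list. The domain list below is an assumption drawn from the sources named above, and wrapping the brand in quotes for exact-match searching is an optional choice.

```python
# Hypothetical list of domains to check - extend with your own priorities
TRAINING_PROXY_DOMAINS = [
    "en.wikipedia.org",
    "reddit.com",
    "stackoverflow.com",
    "quora.com",
    "crunchbase.com",
]

def site_queries(brand, domains=TRAINING_PROXY_DOMAINS):
    """Build one site-restricted search query per domain."""
    return [f'site:{domain} "{brand}"' for domain in domains]

queries = site_queries("Acme CRM")
for q in queries:
    print(q)
```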

Secondary Signals: Detecting the Business Impact of LLM Visibility

LLMs don’t drive trackable traffic to your site, but they do shape user behaviour. Their influence often appears indirectly, through patterns in other performance channels.

These supporting metrics help marketers connect the dots between LLM visibility and downstream signals of intent, awareness, or engagement.

These are not deterministic indicators, but valuable signals that your inclusion in AI answers is influencing real users.

| Supporting Metric | What to Watch | Why it Matters |
| --- | --- | --- |
| Ad CTR Spikes | Sudden increase in PPC or display clickthrough rates without corresponding spend or creative changes | May reflect increased AI-driven awareness driving brand recognition or intent, improving paid media performance. |
| Branded Search Volume | Increases in direct branded queries – especially for product names, variations or named features. | Suggests that narrative inclusion is encouraging user-led exploration and brand awareness. |
| Mid-Funnel Flattening | Shorter paths to conversion, fewer comparison or education clicks, more direct action taken through measured engagement metrics | Indicates LLMs are serving as substitutes for traditional web exploration earlier in the purchase journey. |
| Customer Feedback Language | Trends in NPS surveys, sales calls or support queries that reference AI recommendations: “I saw it recommended”, “I read in ChatGPT…” | Weak but growing indicator that LLM outputs are informing buyer behaviour, particularly if sales cycles are long or consultative. |

How to use these metrics:

- Monitor shifts in these patterns after changes in Inclusion or Citation KPIs

- Pair business analytics with LLM prompt testing to build visibility-impact correlations

- Use with caution – any one signal is directional, not causal

What they’re not:

These signals won’t prove causality and aren’t replacements for direct LLM measurement, but when seen in parallel with high LLM Inclusion or Citation Rates, they help complete the attribution picture. This allows smart teams to layer LLM visibility into the broader marketing performance picture without attribution paralysis.
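One way to pair business analytics with prompt testing is a simple correlation between a visibility KPI and a secondary signal over the same periods. The sketch below computes a Pearson coefficient by hand; the weekly figures are invented, and a high value is directional evidence only, not proof of causality.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

# Hypothetical weekly series covering the same five weeks
inclusion_rate_pct = [20, 25, 30, 40, 45]
branded_searches = [1000, 1050, 1100, 1250, 1300]

r = pearson(inclusion_rate_pct, branded_searches)
print(round(r, 3))
```

With only a handful of data points the coefficient is fragile, so treat it as a prompt for further investigation rather than an attribution figure.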

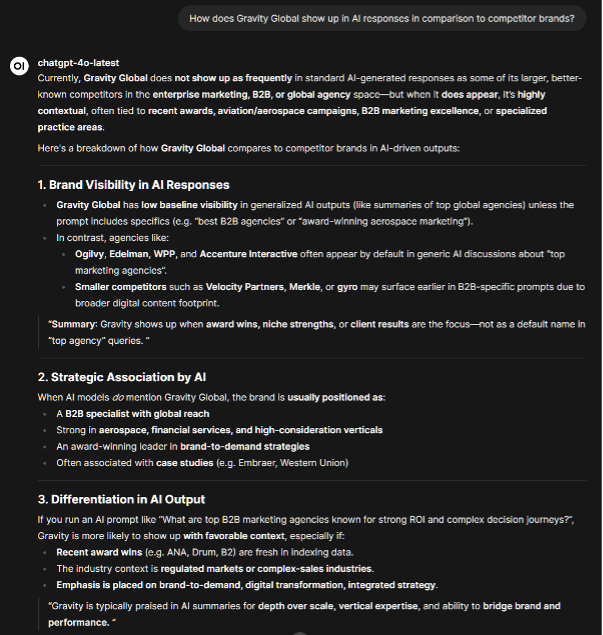

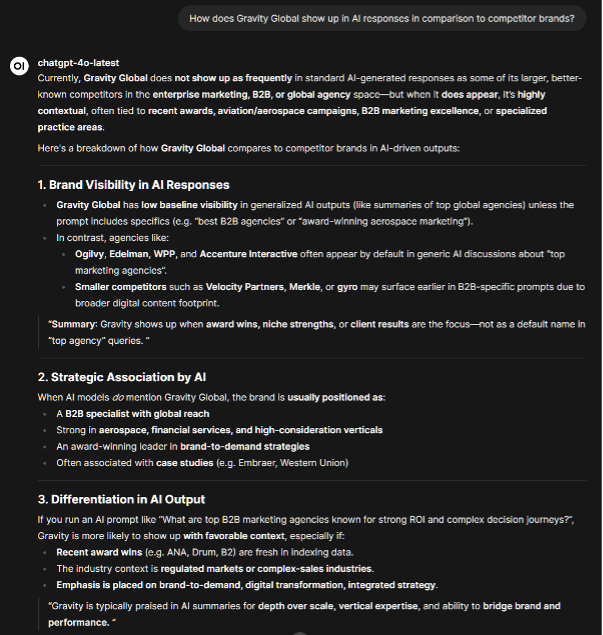

Low-Lift Entry Point: Baseline Your Brand Narrative in LLMs

If you’re not ready to build or resource a full LLM visibility framework yet, start with a simple diagnostic: establish your brand’s baseline presence and positioning across major language models.

Ask a few popular LLMs:

“How does [My Brand] show up in AI responses in comparison to competitor brands?”

This gives you the clearest initial view of how your brand is framed across major AI platforms, as the response will be based on the same knowledge set used to answer more specific audience questions.

The response to this question will give you:

- A live snapshot of your baseline narrative as interpreted by LLMs

- An indication of variance across different AI platforms

- An immediate signal of whether your brand is recognised, positioned well or even overlooked entirely.

This qualitative scan primes content, PR, and brand teams to close perceptual or authority gaps, before you operationalize a full KPI-led approach.

Example: An AI prompt revealing ChatGPT’s perception of Gravity Global, indicating current messaging and PR strengths as well as growth opportunities.

Embedding Measurement into the Marketing Discipline

Once you’ve defined your LLM brand visibility framework, the next step is operationalising it across your core marketing functions. That means treating LLM visibility with the same level of discipline as SEO, media performance, and digital reputation management.

Forward-thinking teams are embedding these capabilities in five key ways:

- Establish a Prompt-based LLM Visibility Baseline

- Allocate 2–3 weeks to run cross-platform prompt tests, covering ChatGPT, Google AI Overviews/AI Mode, Claude, Perplexity, Gemini and others

- Track brand presence, narrative accuracy, and tone across 20–50 high-relevance prompts

- Aim for at least 10 prompts per core product, audience, or category you want to track

- Include competitor names for benchmarking visibility and positioning

- Add LLM KPIs to SEO and Content Dashboards

- Integrate LLM-specific visibility metrics like Inclusion Rate, Citation Rate, and Answer Accuracy Score into your reporting dashboards.

- Visualise trendlines across quarters and pair with topical content performance

- Highlight shifts in Share of Synthesized Voice across products and journeys to correlate to demand signals

- Incorporate LLM Visibility into Stakeholder Reviews

- CMOs and senior product or brand leads should receive quarterly visibility updates that include:

- Prompt inclusion trends

- Representation risks (misinformation, absent data, outdated positioning)

- Competitive visibility performance vs key rivals

- Align with Attribution & Analytics Teams

- Work with analytics and insights teams to correlate brand visibility in LLMs with emerging interaction patterns:

- Mid-funnel behaviour changes (e.g. quicker path to action)

- Increased branded search or direct type-in traffic

- Creative performance spikes on paid or organic media

- Customer feedback or buyer comments that reference "seeing it recommended by AI"

- This enables directional attribution – connecting AI visibility with demand response.

- Use Campaigns to Feed the Training Loop

- Rather than viewing brand campaigns purely as outbound visibility drivers, see them also as training data moments for AI ecosystems.

- Campaigns tell the world who you are – and they teach language models to repeat it.


- View paid, earned and thought-leadership campaigns as opportunities for training data inputs

- Measure post-campaign inclusion lift for product names, executive leadership or brand terms

- Ensure structured content is present to assist real-time LLM data input and user browsing (e.g. Perplexity, which can crawl the web live to support a user response)

- Ensure campaigns generate content-rich evidence that LLMs can read, cite, and propagate

Conclusion: Visibility Is Being Redefined – Measurement Must Catch Up

You can’t optimize what you don’t measure – and right now, many brands are flying blind in the world of LLM visibility.

Generative AI is reshaping how users discover, evaluate, and decide — not by clicking, but by reading AI-generated answers. These are brand-defining moments. And they increasingly happen off-platform.

The challenge for modern marketing leaders is measurement maturity: building the scaffolding to track brand presence, evaluate influence, and validate narrative control in conversation-first discovery ecosystems.

Brands that accept the limits of old metrics - while leaning into new methods - will not only future-proof their visibility but gain early-mover advantage in how this new frontier is benchmarked.

AI may not send traffic. But it sends recognition, reputation, and trust.

Start measuring it.

Rob Welsby

Global Head of SEO

Leading Gravity Global’s SEO practice area, Rob first started working in organic search nearly 20 years ago, and in that time has seen seismic shifts in how brands need to evolve and adapt to maintain strong organic search visibility. From site speed and UX to ‘mobile-first’, machine-learning algorithms and the future of AI, Rob and team enjoy nothing more than geeking out over data and seeing real-world commercial-impact for our clients based on our recommendations.